Solve problems in seconds with the only full-stack, analytics-powered and OpenTelemetry-native observability solution. Splunk supports log analytics and end-to-end observability. As you can see, logs form the basis for many business operations.

Terms and conditions

For the terms and conditions covering this documentation, see the HERE Documentation License.

Data privacy is of fundamental importance to HERE and our customers. We practice data minimization and don’t collect data we don’t need. We promote pseudonymity for data subjects wherever a service does not require personal information to function. We employ privacy by design in services we develop. We strive to go beyond mere regulatory compliance and make privacy an integral part of our corporate culture. We believe that our approach to privacy is vital to earning and retaining the trust of our customers, and that it is the bedrock of our future success as a data-driven location platform.

For more information on how data privacy is of fundamental importance to HERE and our customers, see the HERE Privacy Charter. For more information on data security and durability best practices, see the Data API.

Official documentation

For documentation regarding Grafana, see the official Grafana Documentation v8.3. For documentation regarding Prometheus, see the official Prometheus Documentation.

To monitor all regions of a multiregion catalog, dashboards need to be manually duplicated across all Grafana regions.

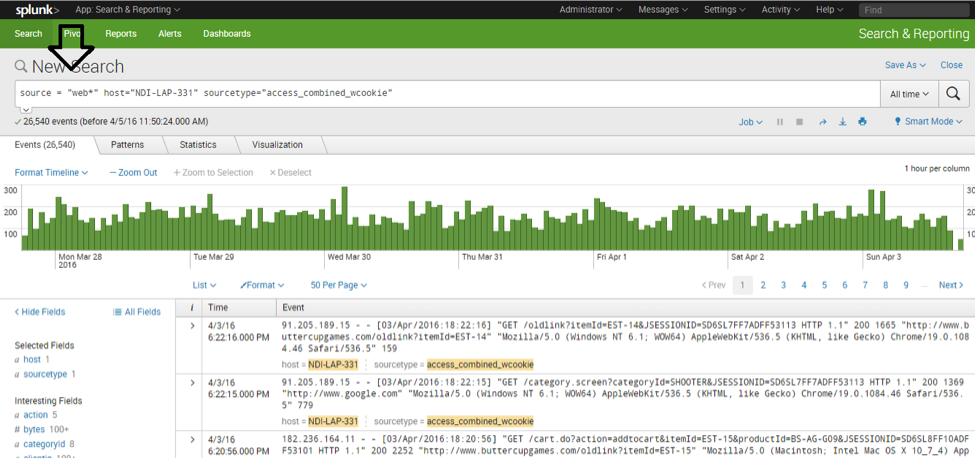

Prometheus only retains data from the previous 30 days. This means that Grafana will not be able to access data over 30 days old. The Splunk tool is global, meaning that the logs coming from all regions will be shown in the results. However, there is one Grafana per region. Metrics displayed on those dashboards will belong only to that region. This applies to multiregion catalogs as well.

#Splunk logs meaning professional

I am relatively new to a company that has used Splunk Professional Services to spin up a Splunk Cloud environment. The company IT has onboarded a lot of AWS, Azure, on-prem and network devices so far. I’m trying to verify that they are in fact sending logs into the Splunk index so that I can eventually apply use cases and alerting on the logs, as well as troubleshoot those hosts which aren’t sending but are supposed to be. There isn’t a Splunk resource in the company so I am trying my best to figure it out as I go.

The IT manager gave me a spreadsheet of hostnames and private IP addresses for all the devices which are forwarding logs. At first I thought I could run a search to just compare his list with logs received by hostname but I can’t figure that out. Over a 30-day search I ran | metadata type=hosts index=* and exported the results to a CSV. I took the ‘hosts’ column (which was a combination of hostnames and IP addresses) from the export and did a diff against the IT manager’s list of hostnames/IP addresses and, where an entry wasn’t found, presumed it had not sent logs during that time period. The inventory has about 850 line items in total which are supposedly onboarded, and I saw logs from about 250. Obviously I am second-guessing myself because of the delta.

When I spot check some hostnames/IP addresses from the IT asset inventory spreadsheet in Splunk, there are some that return no results, some where there is just DNS or FW traffic from that server (so it needs onboarding to get server logs), and others where I get results in which the ‘host’ field is a cloud appliance (like Meraki) and the hostname or IP matches other fields such as ‘dvc_up’, ‘deviceName’ or ‘dvc’. This is really confusing the heck out of me and making me question if there is a better way.
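For anyone attempting the same inventory-versus-export comparison offline, here is a minimal Python sketch of one way to do it. It is not a Splunk feature and not necessarily the best approach. It assumes the inventory has `hostname` and `ip` columns and that the export has a `host` column; those column names, the helper names, and the sample rows are all hypothetical. A device counts as reporting if either its short hostname or its IP appears in the export, which may account for part of a large delta when one side uses fully qualified names or IPs and the other does not.

```python
import csv
import io


def normalize(value):
    """Lowercase and trim; keep IPv4 addresses whole, strip domains from hostnames."""
    value = (value or "").strip().lower()
    parts = value.split(".")
    if len(parts) == 4 and all(p.isdigit() for p in parts):
        return value  # looks like an IPv4 address
    return parts[0]  # short hostname without any domain suffix


def load_seen(csv_text, columns=("host",)):
    """Collect every identifier found in the given columns of the export."""
    seen = set()
    for row in csv.DictReader(io.StringIO(csv_text)):
        for col in columns:
            ident = normalize(row.get(col))
            if ident:
                seen.add(ident)
    return seen


def split_inventory(csv_text, seen, name_col="hostname", ip_col="ip"):
    """Return (reporting, missing) device labels from the inventory."""
    reporting, missing = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        idents = {normalize(row.get(name_col)), normalize(row.get(ip_col))} - {""}
        if not idents:
            continue  # skip blank inventory rows
        label = row.get(name_col) or row.get(ip_col)
        (reporting if idents & seen else missing).append(label)
    return reporting, missing


# Made-up sample data; real files would be read with open(...).read().
inventory_csv = "hostname,ip\nWEB01.corp.example.com,10.0.0.5\ndb01,10.0.0.6\napp02,10.0.0.7\n"
export_csv = "host\nweb01\n10.0.0.6\nmeraki-cloud\n"

seen = load_seen(export_csv)
reporting, missing = split_inventory(inventory_csv, seen)
print("reporting:", reporting)
print("missing:", missing)
```

If devices show up only under fields such as ‘dvc’ or ‘deviceName’, a separate export of those fields could be passed in as extra `columns` to `load_seen`, so that appliances logging on behalf of other hosts are counted too. Whether that is the right definition of "sending logs" depends on the use case.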
